Snowflake Launches Document AI (It Looks Quite Handy)

Roundup #3

Hello data folks 👋

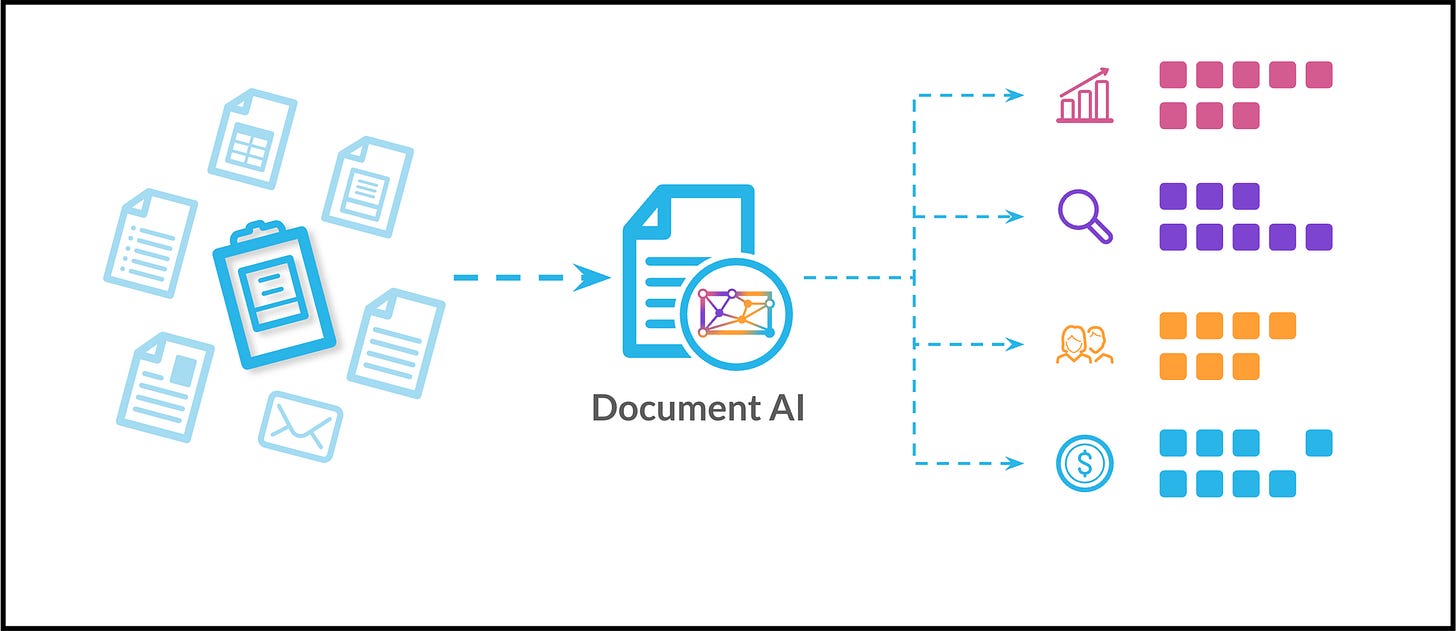

Snowflake just launched new AI document processing features inside Cortex AI:

AI_EXTRACT function: powered by Snowflake's proprietary Arctic-Extract model, supporting 29 languages

PARSE_DOCUMENT LAYOUT mode: for complex multimodal documents

Automated Table Extraction: from structured documents like financial reports

The platform processes 10+ document formats within Snowflake's native environment, eliminating the need for separate document processing infrastructure. According to Snowflake (😉), Arctic-Extract outperformed competitors on DocVQA benchmarks while offering a SQL-native implementation for enterprise workflows.

If you’re on Snowflake, these are really cool new features that could help you consolidate your document processing workloads into your existing Snowflake environment, reducing infrastructure complexity and improving governance of your unstructured data pipelines at the same time!

Source: Snowflake blog

SAP Pours More Than $23B Into EU Sovereign Cloud Buildout

CIO Dive | September 2, 2025 | 3 minute read

SAP announced a $23.3 billion investment to expand its sovereign cloud infrastructure across Europe. The goal is to offer data residency controls through regional data centers and on-premises deployments.

The move follows similar sovereign cloud launches by AWS, Microsoft, and Google Cloud as enterprises scramble to achieve data sovereignty. Why? Much stricter EU AI Act compliance requirements will start to bite hard next year. SAP positions itself as the only vendor offering sovereignty “across the entire stack from infrastructure to application.”

If you deal with EU customers or users, you should check your current cloud architecture for regulatory compliance gaps. Consider sovereign cloud options for sensitive workloads ahead of the EU AI Act implementation.

Why Every Data Team Struggles With Ingestion Tools (and the 5 Critical Problems No Vendor Solves)

Moez Khan | September 2, 2025 | 6 minute read

The author’s experiments show systematic failures across major data ingestion tools, including Fivetran, Airbyte, and Matillion. All of them struggle with the same problems despite competing on different features.

The author’s tests revealed five critical gaps:

JSON handling forces an impossible choice between tables with a ridiculous number of columns and opaque text blobs

Schema evolution breaks pipelines when APIs change

Enterprise features add complexity even when all you need is basic batch processing

JSON-to-SQL translation creates lossy compromises

Vendor demos consistently fail to match production reality
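To make the JSON problems above concrete, here is a minimal Python sketch of the two usual ways to land nested JSON in a SQL table: explode every nested key into its own column, or keep the record as an opaque text blob. The function names and the `__` column separator are hypothetical choices for illustration, not how Fivetran, Airbyte, or Matillion actually do it.

```python
import json
from typing import Any, Dict, Iterable, List, Set


def flatten(record: Dict[str, Any], prefix: str = "", sep: str = "__") -> Dict[str, Any]:
    """Option A: one column per nested key (hypothetical naming scheme)."""
    out: Dict[str, Any] = {}
    for key, value in record.items():
        col = f"{prefix}{sep}{key}" if prefix else key
        if isinstance(value, dict):
            # Recurse so {"customer": {"name": ...}} becomes "customer__name".
            out.update(flatten(value, col, sep))
        elif isinstance(value, list):
            # Arrays have no natural column mapping, so they get serialized:
            # this is where the JSON-to-SQL translation becomes lossy.
            out[col] = json.dumps(value)
        else:
            out[col] = value
    return out


def as_blob(record: Dict[str, Any], key_field: str) -> Dict[str, Any]:
    """Option B: keep a single key column and store everything else as text."""
    return {key_field: record[key_field], "payload": json.dumps(record, sort_keys=True)}


def table_columns(records: Iterable[Dict[str, Any]]) -> List[str]:
    """Under option A, the table needs the union of every key ever seen."""
    cols: Set[str] = set()
    for record in records:
        cols.update(flatten(record))
    return sorted(cols)


def schema_drift(known_columns: Iterable[str], record: Dict[str, Any]) -> List[str]:
    """Columns a new record introduces that the destination table lacks."""
    return sorted(set(flatten(record)) - set(known_columns))


if __name__ == "__main__":
    r1 = {"id": 1, "customer": {"name": "A", "address": {"city": "Oslo"}}, "tags": ["x", "y"]}
    r2 = {"id": 2, "customer": {"name": "B", "phone": "123"}}
    print(table_columns([r1, r2]))  # ['customer__address__city', 'customer__name', 'customer__phone', 'id', 'tags']
    print(schema_drift(["id", "customer__name"], r2))  # ['customer__phone']
```

Neither option is free: option A widens the table every time an API adds a field (which is exactly the schema-evolution case), while option B keeps the schema stable but pushes JSON parsing into every downstream query.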

Most painful finding: teams pay premium licensing for “zero-maintenance” tools, then hire specialists to work around vendor-specific limitations. The takeaway: always test tools against your actual operational needs rather than trusting demo performance.

DuckDB Beyond Analytics: Using It as a Feature Store

Thinking Loop | September 2, 2025 | 4 minute read

DuckDB can serve as an alternative feature store for ML pipelines, moving beyond its traditional analytics role. The embedded database's columnar storage, SQL-native interface, and seamless integration with Parquet/Arrow make it viable for feature management without infrastructure overhead.

DuckDB can handle billions of rows locally while avoiding the complexity of distributed feature stores like Feast or Tecton. If, for example, you’re working on fraud detection and batch inference workflows, you could compute and serve features through standard SQL queries.

The hybrid approach is probably the most practical: DuckDB for training and batch inference, with selective promotion to Redis/specialized stores for real-time serving when millisecond latency is required.
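The hybrid pattern boils down to copying a small, selected subset of batch-computed features into a low-latency key-value store. A minimal sketch follows; the dict-backed `InMemoryOnlineStore` is a hypothetical stand-in for Redis, and the key format and feature names are assumptions. With redis-py, the equivalent write would be roughly `r.hset(key, mapping=...)`, though a real Redis client returns values as bytes rather than Python numbers.

```python
from typing import Dict, Iterable, Mapping, Sequence


class InMemoryOnlineStore:
    """Hypothetical stand-in for Redis: a dict of hashes keyed by string."""

    def __init__(self) -> None:
        self._data: Dict[str, Dict[str, float]] = {}

    def hset(self, key: str, mapping: Mapping[str, float]) -> None:
        # Merge fields into the hash stored at `key`, like a Redis HSET.
        self._data.setdefault(key, {}).update(mapping)

    def hgetall(self, key: str) -> Dict[str, float]:
        # Return a copy so callers cannot mutate the store directly.
        return dict(self._data.get(key, {}))


def promote_features(
    batch_rows: Iterable[Mapping[str, float]],
    online_features: Sequence[str],
    store: InMemoryOnlineStore,
    entity_col: str = "user_id",
) -> int:
    """Copy only the features the real-time model needs into the online store."""
    promoted = 0
    for row in batch_rows:
        key = f"features:{entity_col}:{row[entity_col]}"
        store.hset(key, mapping={name: row[name] for name in online_features})
        promoted += 1
    return promoted
```

Everything else (training sets, batch scoring) stays in DuckDB; only the features a real-time model must read within milliseconds get promoted.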

If your team spends more time on feature store infrastructure than on model development, you should give DuckDB a try. It’s particularly relevant for startups and prototyping phases, where traditional feature stores add complexity you probably don’t need.

One More Thing(s)

As an experienced DE, what do you wish you had known earlier? (Reddit)

I've rarely come across a concept that needs as many analogies as Data Governance (LinkedIn)

That’s the brief.